Welcome back, Spectrum Visionaries.

Between Razer's holographic AI companion, backed by a $600M AI push, and Clone Robotics' hand that learns by watching, vision hardware is getting smarter. Meanwhile, traditional cameras drain batteries fast, and the fix comes from biology.

This issue covers holographic desk companions, vision-trained robotic hands, and why frameless cameras will dominate edge AI by 2030.

THE AI UPDATE

Razer launches 5.5-inch holographic AI avatar:

The gaming giant unveiled Project AVA, a 5.5-inch AI hologram desk companion, at CES. Running on xAI's Grok, it handles everything from scheduling to gaming strategy. The company also revealed Project Motoko, a headset that delivers AI through audio rather than smart glasses. Both projects are part of Razer's $600 million AI investment. Watch the holographic assistant in action here.

Viral startup's most lifelike robotic hand learns by watching humans:

Clone Robotics dropped a demo of its new robotic hand that mirrors human movements with lifelike grip strength and speed.

The hand uses a neural network trained on hours of human hand footage, learning motion in real time instead of having every finger movement programmed by hand. It runs on water-powered Myofiber muscles tested for 650K cycles without fatigue, making it the most durable vision-trained hand shown in a public demo.

THE DEEP DIVE

Frameless Vision: The 2030 Architecture for Edge AI

By 2030, edge AI is projected to be a $66.47 billion market spanning 150 billion intelligent edge devices. But these sensors won't see the way cameras have for 150 years. Traditional vision burns too much power, and frameless vision is replacing it.

Why frames don't work for edge AI. A warehouse camera films a shelf at 60 frames per second. That's 3,600 photos every minute, even when nothing moves. The processing pipeline draws 5 watts around the clock, re-analyzing billions of pixels that haven't changed.
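To make those numbers concrete, here is a quick back-of-the-envelope sketch. The 60 fps and 5-watt figures come from the example above; the 1920x1080 resolution is an assumption picked for illustration. Since watts measure power (energy per second), the sketch treats 5 W as the pipeline's continuous draw and divides by the frame rate to get energy per frame.

```python
# Back-of-the-envelope cost of an always-on frame camera.
# FPS and POWER_WATTS come from the warehouse example; the resolution is hypothetical.
FPS = 60                     # frames per second (from the text)
POWER_WATTS = 5.0            # continuous processing draw (from the text)
WIDTH, HEIGHT = 1920, 1080   # assumed 1080p sensor (hypothetical)

frames_per_minute = FPS * 60
pixels_per_frame = WIDTH * HEIGHT
pixels_per_minute = frames_per_minute * pixels_per_frame
joules_per_minute = POWER_WATTS * 60   # energy = power x time
joules_per_frame = POWER_WATTS / FPS   # energy spent on each frame

print(frames_per_minute)            # 3600
print(pixels_per_minute)            # 7464960000, roughly 7.5 billion pixels
print(joules_per_minute)            # 300.0
print(round(joules_per_frame, 4))   # 0.0833
```

Every one of those 7.5 billion pixel reads happens whether or not anything on the shelf moved.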

Now multiply that across millions of drones, smart glasses, and robots running on coin-sized batteries. Edge AI can't scale if cameras drain devices in hours instead of days.
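The "hours instead of days" gap follows from simple division: runtime is stored energy over average power draw. A minimal sketch, where the 10 Wh capacity and the 0.5 W low-power draw are hypothetical values chosen for illustration and `battery_life_hours` is our own helper, not a library function:

```python
def battery_life_hours(capacity_wh: float, draw_watts: float) -> float:
    """Ideal runtime: stored energy (Wh) divided by average power draw (W).
    Ignores conversion losses, temperature, and battery aging."""
    if draw_watts <= 0:
        raise ValueError("draw_watts must be positive")
    return capacity_wh / draw_watts

CAPACITY_WH = 10.0   # hypothetical small-device battery
ALWAYS_ON_W = 5.0    # frame pipeline draw from the warehouse example
LOW_POWER_W = 0.5    # hypothetical sensor drawing a tenth of that

print(battery_life_hours(CAPACITY_WH, ALWAYS_ON_W))  # 2.0 (hours)
print(battery_life_hours(CAPACITY_WH, LOW_POWER_W))  # 20.0 (hours)
```

Cutting average draw by 10x stretches runtime by the same factor, which is the lever the rest of this piece is about.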

Biology solved it first. Our retinas fire mainly when something changes, like a car moving or someone walking past. That efficiency is part of why human vision runs on just 30% of the brain's 20-watt budget.

Event cameras mimic the eyes. Each pixel fires only when the light it sees changes, so static scenes generate almost no data, cutting energy costs by up to 90%. They react in microseconds and handle extreme contrasts, like your eyes adjusting from darkness to daylight.
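Here is a rough sketch of the idea in Python. Real event pixels are asynchronous analog circuits that track log intensity continuously; this version approximates them by comparing successive frames, the way event-camera simulators often do. The `frames_to_events` helper, the `Event` record, and the 0.2 log-intensity threshold are hypothetical choices for illustration, not any vendor's API.

```python
import math
from typing import List, NamedTuple

class Event(NamedTuple):
    x: int
    y: int
    t: float       # timestamp in seconds
    polarity: int  # +1 = got brighter, -1 = got darker

def frames_to_events(frames: List[List[List[float]]], timestamps: List[float],
                     threshold: float = 0.2) -> List[Event]:
    """Emit an event whenever a pixel's log intensity moves by >= threshold
    from the last level that pixel reported. Simplified: one event per pixel
    per frame, even for very large changes."""
    eps = 1e-6  # avoids log(0) on fully dark pixels
    ref = [[math.log(p + eps) for p in row] for row in frames[0]]
    events: List[Event] = []
    for frame, t in zip(frames[1:], timestamps[1:]):
        for y, row in enumerate(frame):
            for x, p in enumerate(row):
                level = math.log(p + eps)
                delta = level - ref[y][x]
                if abs(delta) >= threshold:
                    events.append(Event(x, y, t, 1 if delta > 0 else -1))
                    ref[y][x] = level  # this pixel's new reference level
    return events

static = [[0.5, 0.5], [0.5, 0.5]]
moved = [[0.5, 0.9], [0.5, 0.5]]  # one pixel gets brighter
print(frames_to_events([static, static, static], [0.0, 0.01, 0.02]))  # []
print(frames_to_events([static, moved], [0.0, 0.01]))
# [Event(x=1, y=0, t=0.01, polarity=1)]
```

A static scene yields an empty list, while a single brightening pixel yields one positive event. Working in log intensity means the same threshold responds to relative contrast, so dim and bright regions are treated alike.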

With event sensors, robots navigate terrain in real time, autonomous vehicles spot pedestrians 20 milliseconds faster than with frame-based cameras, and surgical systems track movement to the millimeter. Paired with neuromorphic chips, event sensors are already powering the next generation of edge devices.
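To put "20 milliseconds faster" in perspective, the sketch below converts a perception delay into distance traveled, and shows the worst-case wait a 60 fps camera adds before a change even lands in a frame. The 20 ms figure comes from the text; the vehicle speeds and both helper names are hypothetical.

```python
def distance_during_delay_m(speed_kmh: float, delay_s: float) -> float:
    """Distance covered while perception lags: speed (converted to m/s) x time (s)."""
    return speed_kmh / 3.6 * delay_s

def worst_case_frame_wait_ms(fps: float) -> float:
    """A change just after one capture isn't seen until the next frame."""
    return 1000.0 / fps

print(round(worst_case_frame_wait_ms(60), 2))         # 16.67
print(round(distance_during_delay_m(50, 0.020), 3))   # 0.278 (meters at 50 km/h)
print(round(distance_during_delay_m(100, 0.020), 3))  # 0.556 (meters at 100 km/h)
```

At city speeds, 20 ms works out to roughly a quarter meter of extra margin before the vehicle can react.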

Takeaway: For 150 years, we forced machines to see like cameras. Event-based vision finally mimics biological vision, making AI "awake" without draining the battery. By 2030, the frame will be the limitation everyone left behind.

IN THE LOOP

What’s trending in the space

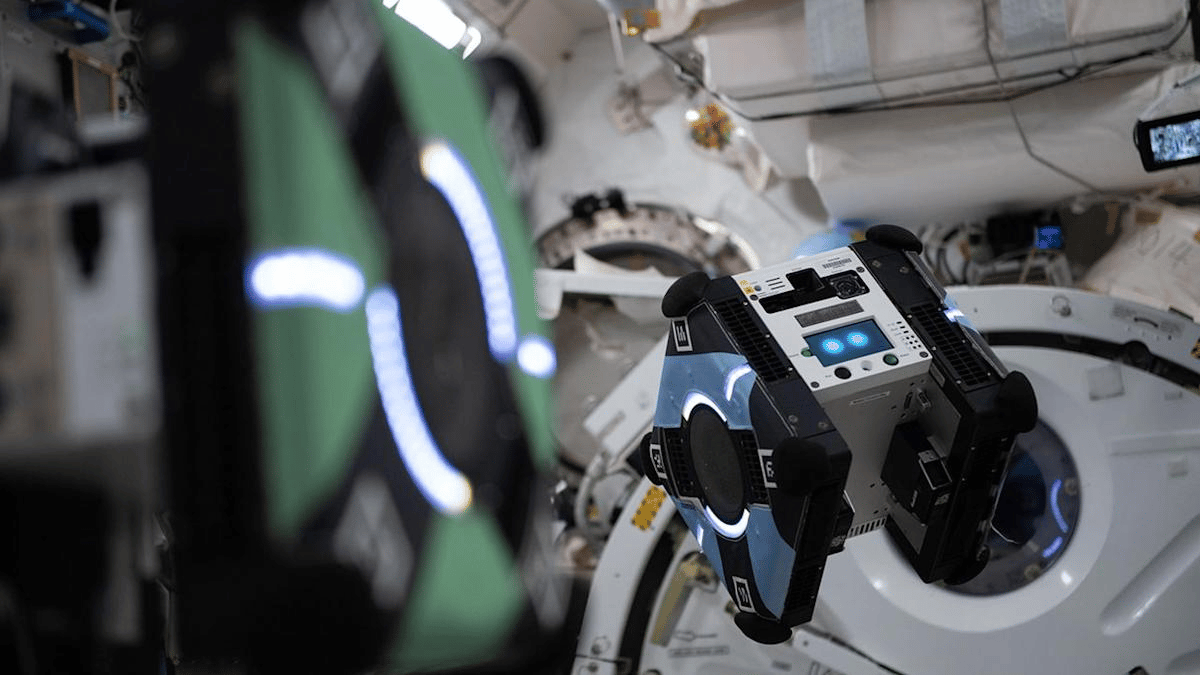

Stanford’s robot aboard the International Space Station became the first to navigate on its own, moving through tight corridors 50% faster without human help, preparing for Mars missions where signals from Earth can take up to 20 minutes to arrive.

Researchers published a bionic sensor in Nature that mimics the human eye's focusing mechanism; by concentrating on what matters most, it could give self-driving cars 15 times sharper vision.

A tiny Australian underwater robot disappeared under Antarctica's ice for nine months and came back functional, carrying climate data from a “never accessed” region of the planet.

The Allen Institute released Molmo 2, an open-source video AI model that beats models 9 times its size, tracks objects through entire video clips better than Google's system, and is free for anyone to use.

UCLA engineers created a headset that reads brain signals and helps paralyzed patients, enabling faster cursor control and robotic arm manipulation without surgical implants.

What did you think of today’s issue?

Hit reply and tell us why; we actually read every note.

See you in the next upload