Welcome back, Spectrum Visionaries.

AI is hitting a strange ceiling, and it isn't compute or chips: it's text itself. By some estimates, LLMs could run out of fresh text to learn from as early as 2026, and video games may help train AI instead. While researchers use games to teach machines how reality works, a 100-person startup beat Google and OpenAI at video generation, and Disney is building a robot that will greet you at Disneyland Paris.

This issue covers Runway's surprise win, Google's vision-first Pixel 10 bet, and why video games are becoming the proving ground for embodied AI.

THE AI UPDATE

Google bets on AI vision to sell the Pixel 10:

The headliner at "Made by Google" 2025 was the Pixel 10 lineup: four new phones with a shared design, where AI vision is the main attraction. New additions include prompt-based photo editing, Gemini-powered visual adjustments in Messages, and "Visual Overlays" for the camera. The shift signals that the phone wars are no longer about lenses but about which AI can best understand and transform what you see.

100-person startup Runway outranks trillion-dollar giants:

Runway's Gen-4.5 claimed the top spot on a major text-to-video leaderboard, beating models from trillion-dollar giants: Google's Veo 3 and OpenAI's Sora 2. The model stands out for physics accuracy and realistic human movement. Unlike LLMs, which predict the next word, video models like Gen-4.5 predict how light, motion, and gravity interact frame by frame.

Disney's Olaf robot is headed to Disneyland Paris:

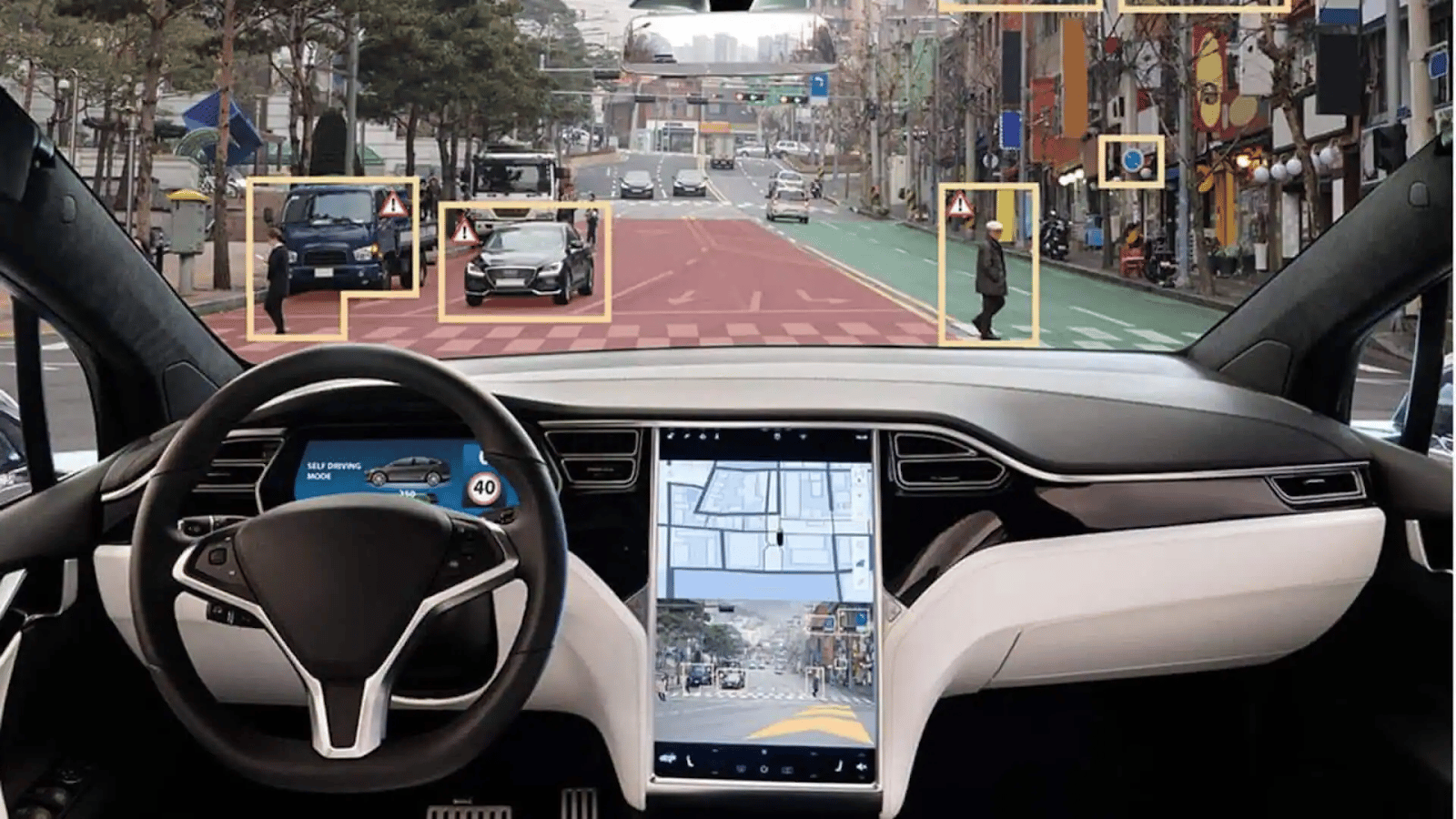

The iconic Frozen character is stepping off the screen and into real life as a lifelike robot that holds conversations, makes eye contact, and moves with fluid, natural motion. Built on Newton, the open-source physics engine from NVIDIA and Google DeepMind, Olaf will greet guests at Disneyland Paris. The same kind of simulation technology used to train robots and self-driving cars is now bringing animated characters to life.

THE DEEP DIVE

What happens when AI runs out of text to read? It starts playing games

By some estimates, LLMs could exhaust the internet's supply of high-quality text as early as 2026. Without fresh training data, how will AI keep improving? Some experts believe the answer is video games.

Why games work.

Video games can generate effectively unlimited visual data showing AI how a physical world behaves. Movement, physics, cause and effect, and spatial relationships all arrive as clean data, without the noise and labeling errors that plague real-world footage. And unlike expensive camera rigs or human annotation, games can produce endless training scenarios at near-zero marginal cost.

Meet World Models.

Unlike LLMs, which predict the next word, world models learn by watching how objects move, collide, and interact, then predicting what happens next. In effect, they build an internal physics engine that captures cause and effect in visual space. It's the difference between reading about gravity and actually seeing how it works.
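For the technically curious, here is a deliberately tiny sketch of the idea, not how any lab actually builds world models (real ones learn from raw pixels with large neural networks). We assume a hypothetical toy "game engine" (`simulate_drop`) that renders a falling ball as (height, velocity) states, generate as many episodes as we like for free, and fit a one-parameter model that predicts the next frame. All names and numbers here are illustrative.

```python
import random

DT = 1 / 30        # assumed frame interval: 30 frames per second
TRUE_G = -9.81     # gravity baked into our toy "game engine" (m/s^2)

def simulate_drop(height, v0, n_frames):
    """Toy game engine: returns a list of (y, vy) states for a falling ball."""
    y, vy = height, v0
    frames = [(y, vy)]
    for _ in range(n_frames):
        vy += TRUE_G * DT   # update velocity from gravity
        y += vy * DT        # then update position from the new velocity
        frames.append((y, vy))
    return frames

def collect_transitions(n_episodes, seed=0):
    """Generate (state, next_state) pairs; extra episodes cost only CPU time."""
    rng = random.Random(seed)
    pairs = []
    for _ in range(n_episodes):
        frames = simulate_drop(rng.uniform(5, 50), rng.uniform(-5, 5), 20)
        pairs.extend(zip(frames, frames[1:]))
    return pairs

def fit_world_model(pairs):
    """Learn the per-frame acceleration; the least-squares fit of a constant is the mean."""
    accels = [(nxt[1] - cur[1]) / DT for cur, nxt in pairs]
    return sum(accels) / len(accels)

def predict_next(state, g_hat):
    """Use the learned model to predict the next frame from the current one."""
    y, vy = state
    vy += g_hat * DT
    return (y + vy * DT, vy)

pairs = collect_transitions(100)
g_hat = fit_world_model(pairs)
print(f"learned gravity: {g_hat:.2f} m/s^2")  # prints: learned gravity: -9.81 m/s^2
```

The model never reads the word "gravity"; it recovers the constant purely from watching frames change, which is the intuition behind training on game footage.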

Big labs and startups are already making moves.

General Intuition and Google DeepMind's SIMA 2 are using game data to train AI agents, largely by learning from observation. World Labs' Marble generates entire 3D environments from simple prompts. Even Yann LeCun, one of the "godfathers of AI," left Meta to build world models full-time.

The Takeaway?

Warehouse robots need to understand how boxes stack, self-driving cars need to predict pedestrian movement, and humanoids need to grasp door handles. None of that comes from reading Wikipedia; it comes from seeing how the world actually behaves.

World models trained on game data could be the bridge to embodied AI that works in the real world.

IN THE LOOP

What’s trending in the space

At NeurIPS 2025, NVIDIA unveiled Alpamayo-R1, which it calls the first open vision-language-action model for autonomous driving. It combines visual perception, reasoning, and trajectory planning to handle complex road scenarios.

The AI computer vision market is projected to roughly double, from $56.4B in 2025 to $117B by 2030, driven by deep learning breakthroughs and surging demand for surveillance and automation.
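For a sense of pace, the two projection figures imply a compound annual growth rate (CAGR) of about 15.7% over the five years, assuming steady compounding between the endpoints:

```latex
\text{CAGR} = \left(\frac{117}{56.4}\right)^{1/5} - 1 \approx 2.074^{0.2} - 1 \approx 0.157
```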

RealSense partnered with AVerMedia to launch the SenseEdge AI Vision Kit, combining depth cameras and NVIDIA Jetson to accelerate humanoid and mobile robot deployment.

ClearSpot.ai brings edge AI vision to drones, enabling real-time anomaly detection for US infrastructure inspections without cloud latency.

The White House AI Action Plan prioritizes AI data centers and high-security compute for defense use, including DoD vision systems, a push aimed at strengthening US leadership in computer vision.

What did you think of today’s issue?

Hit reply and tell us why; we actually read every note.

See you in the next upload